This is a report on the AI/ML (Artificial Intelligence/Machine Learning) Case Study. It covers how Synergy uses AI/ML to solve complex problems, how to qualify a project as a good candidate for AI/ML, the platforms used, the projects implemented, training and technical support, the data governance plan, and lessons learned from experience with similar federal procurements.

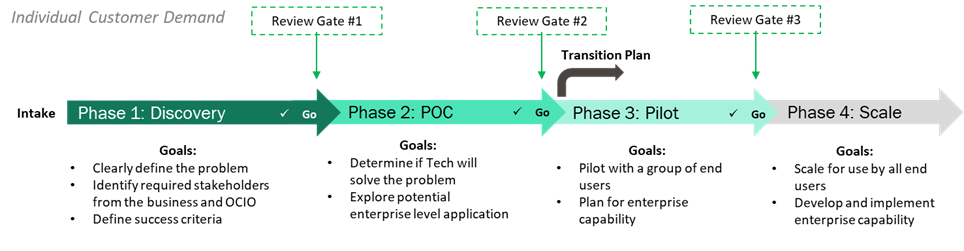

ET (Emerging Technologies) AI/ML project workloads generally come to the team as demands from customer agency teams with specific business problems that advanced technology can solve. Because of its resources and expertise, the ET AI/ML team is considered the go-to team at DOL (Department of Labor) for AI-based solutions. The team’s project intake and incubation process operates in four phases: Discovery, POC (Proof of Concept), Pilot, and Production (Scale).

In the Discovery phase, the ET AI/ML team reviews the demand summary and schedules a formal meeting with the customer to capture the requirements and evaluate their needs. The team then explores the problem statement and requirements in depth and designs a technical system architecture diagram with AI-based solution options. The proposal is presented to the customer for review and approval, and it is also shared with other key teams, such as cloud-ops and security-ops, for collaboration.

In the next phase, POC, a small dataset is collected from the customer to train ML models and conduct an initial evaluation in the provisioned POC environment. Parts of the system architecture, including the AI service of interest, are prototyped, and ML models are trained and tuned to find the best fit. If the initial prototyping succeeds, the customer is shown a working demo and provides the corresponding documentary evidence. Cost models for the Pilot and Production phases are then created as a budget reference for the customer.

The Pilot phase requires customer approval of the provided cost model, the RACI (Responsible, Accountable, Consulted, and Informed) matrix, and the proposed system architecture. Once approval is complete, the ET AI/ML team works with the cloud-ops team to provision a secure Pilot/Production environment and implement the full solution according to the documented implementation plan, including security and network configuration. Pilot expectations are outlined and delivered according to specification, and unit testing and user acceptance testing are completed in this phase. Change requests are handled as additional scope of work. When the pilot period concludes, the entire process is evaluated against Responsible AI frameworks, and a pilot report is delivered to the customer. The customer also evaluates the process using its own set of test metrics.

In the Production/Scale phase, the customer decides whether to move forward with a fully funded budget and complete system integration with its working environment. The solution is deployed according to the final approved system architecture, and system and ML model monitoring is performed through several channels, most prominently the Snowflake data warehouse built by the ET AI/ML team and its business value reporting dashboard. Alerts are configured in the platform to send notifications about billing and errors. The O&M (Operations and Maintenance) transition plan is implemented to support the solution throughout its lifecycle.
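The phase gates described above can be sketched as a small state machine. This is a minimal illustration, not the team’s tooling: the approval labels (`proposal`, `cost_model`, `raci_matrix`, `system_architecture`, `funded_budget`) are hypothetical names for the approvals the text describes, and `next_phase` is an illustrative helper.

```python
from enum import Enum
from typing import Dict, Set


class Phase(Enum):
    DISCOVERY = 1
    POC = 2
    PILOT = 3
    PRODUCTION = 4


# Approvals required before entering each phase. The labels are hypothetical;
# the requirements themselves follow the phase descriptions in the report.
GATE_REQUIREMENTS: Dict[Phase, Set[str]] = {
    Phase.POC: {"proposal"},  # customer approves the Discovery proposal
    Phase.PILOT: {"cost_model", "raci_matrix", "system_architecture"},
    Phase.PRODUCTION: {"funded_budget"},  # customer commits a fully funded budget
}


def next_phase(current: Phase, approvals: Set[str]) -> Phase:
    """Advance one phase if every approval required by the next gate is present."""
    if current is Phase.PRODUCTION:
        return current  # last phase; the O&M plan takes over from here
    target = Phase(current.value + 1)
    missing = GATE_REQUIREMENTS[target] - approvals
    if missing:
        raise ValueError(f"cannot enter {target.name}: missing {sorted(missing)}")
    return target
```

Raising an error on a missing approval, rather than silently staying in place, mirrors the idea that a gate is a hard stop until the customer signs off.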

Below is a flowchart explaining the ET AI/ML incubation process:

Figure 1: AI/ML Process Workflow Methodology

The ET AI/ML workflow process includes delivery of the key documentation noted below:

The customer’s problem statement and requirements are evaluated through the project demand intake process. During intake, the provided input and the desired output are reviewed, and if a simple logic-based design cannot solve the problem, a machine learning modeling system may be recommended based on the following:

The customer’s requirements are reviewed against current ET AI/ML offerings, and if there is a potential use case, an AI/ML-based solution is recommended.

The ET AI/ML team is platform-agnostic: from among all procured platforms, it recommends the solution that performs best and has the least impact on the existing system for that particular use case. AI-Ops (Artificial Intelligence Operations) workflows often require integrating the trained ML model into the customer’s operating environment via an API (Application Programming Interface). After collecting and reviewing requirements, the AI team evaluates the customer’s operating environment and designs a working solution that integrates seamlessly without compromising performance. If the project involves exploring new technology, competing platforms are evaluated in the POC phase, and the best-performing solution/platform is recommended for implementation in the Pilot phase.

For example, in a Form Recognizer project, the customer’s existing form processing system runs on an on-premises IBM DB2 database and a Red Hat Enterprise Linux server that executes Perl scripts. To minimize the impact on the customer’s existing system, the ET team wrote extensive Perl code to send forms to the Azure Form Recognizer API over a private endpoint, process the results, and update the customer’s DB2 database with the extracted data.
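The production integration described above was written in Perl; to keep all examples in one language, the following Python sketch shows the same shape of flow under stated assumptions. `analyze` is a hypothetical stand-in for the call to the Azure Form Recognizer API (no Azure SDK call is shown), `sqlite3` stands in for the customer’s DB2 database, and the `forms` table and its columns are invented for illustration.

```python
import sqlite3
from typing import Callable, Dict, Optional

# Hypothetical signature: raw form bytes in, extracted field name -> value out.
# In the real project this role was played by Perl code calling the Azure
# Form Recognizer API over a private endpoint.
AnalyzeFn = Callable[[bytes], Dict[str, str]]


def process_form(form_id: str, form_bytes: bytes, analyze: AnalyzeFn,
                 conn: sqlite3.Connection) -> Dict[str, Optional[str]]:
    """Send one form for analysis, then write the extracted fields back to the database."""
    fields = analyze(form_bytes)
    # Keep only the fields the existing downstream system expects
    # (column names are hypothetical).
    row = {"claimant_name": fields.get("claimant_name"),
           "claim_date": fields.get("claim_date")}
    conn.execute(
        "UPDATE forms SET claimant_name = ?, claim_date = ?, status = 'EXTRACTED' "
        "WHERE form_id = ?",
        (row["claimant_name"], row["claim_date"], form_id),
    )
    conn.commit()
    return row
```

Passing `analyze` in as a function keeps the database update logic testable without network access; in a real deployment that function would wrap the private-endpoint API call.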

Another example of a hybrid design is a transcription solution that converts voicemails to text. For this solution, the customer developed a Java process that converts voicemails from an on-premises IVR (Interactive Voice Response) system to a format supported by the cloud AI solution and uploads them in batches to cloud storage. The Java process then triggers the AI solution to transcribe the voicemails and place the results in a separate cloud storage location. When the results are available, the Java process downloads them, adds them to an on-premises DB2 database, and cleans up the files in cloud storage.
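The batch cycle just described can be sketched in four steps. The customer’s actual implementation is a Java process; this Python sketch assumes a plain dictionary standing in for cloud storage, a list standing in for the DB2 table, and hypothetical `convert` and `transcribe` functions in place of the format conversion and the cloud transcription service.

```python
from typing import Callable, Dict, List, Tuple


def run_transcription_batch(
    voicemails: Dict[str, bytes],
    convert: Callable[[bytes], bytes],       # hypothetical format converter
    transcribe: Callable[[bytes], str],      # hypothetical cloud transcription call
    storage: Dict[str, object],              # stands in for cloud storage
    db_rows: List[Tuple[str, str]],          # stands in for the on-prem DB2 table
) -> int:
    """One batch cycle: convert and upload, transcribe, download and persist, clean up."""
    ids = list(voicemails)
    # 1. Convert each voicemail to a supported format and upload it.
    for vm_id in ids:
        storage[f"input/{vm_id}"] = convert(voicemails[vm_id])
    # 2. Trigger transcription; results land in a separate output location.
    for vm_id in ids:
        storage[f"output/{vm_id}"] = transcribe(storage[f"input/{vm_id}"])
    # 3. Download the results into the on-prem database.
    for vm_id in ids:
        db_rows.append((vm_id, storage[f"output/{vm_id}"]))
    # 4. Clean up both storage locations for this batch.
    for vm_id in ids:
        del storage[f"input/{vm_id}"]
        del storage[f"output/{vm_id}"]
    return len(ids)
```

Cleaning up only the keys belonging to the current batch avoids deleting files that a concurrent batch may still be processing.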

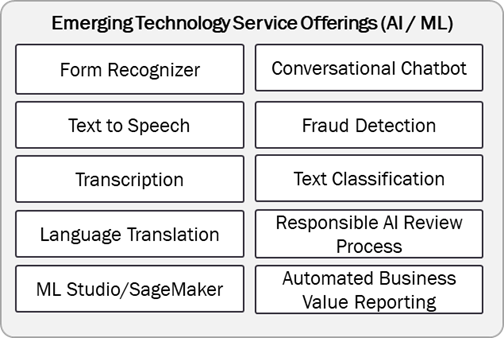

Below are the AI/ML service offerings that have been implemented and have projects in the Discovery, POC, Pilot, or Production phase.

Figure 2: AI/ML Service Offerings

Both on-prem and cloud-based projects go through the ET AI/ML incubator process outlined in Figure 1. During requirements gathering for every project, the implementation plan evaluates on-prem versus cloud-based solution offerings. Factors to consider include feasibility, system integration, maintenance, cost, oversight, security, and performance. Some solutions require the platform to have no internet access because of security risks, so the solution may need to be implemented on-prem. The performance of such a system is constrained by available compute power, the availability of offline ML models, and access to updates.
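One way to make this comparison explicit is a weighted scoring matrix over the factors listed above. The report does not say the team uses numeric scores, so the weights, scores, and the `recommend_deployment` helper below are assumptions; the only rule taken from the text is that a no-internet requirement rules out the cloud option.

```python
from typing import Dict

# Factors named in the report; weights and scores supplied by the caller are hypothetical.
FACTORS = ("feasibility", "system_integration", "maintenance", "cost",
           "oversight", "security", "performance")


def recommend_deployment(scores: Dict[str, Dict[str, float]],
                         weights: Dict[str, float],
                         requires_offline: bool) -> str:
    """Return "on_prem" or "cloud", whichever has the higher weighted score."""
    # A hard security constraint (no internet access) removes the cloud option outright.
    candidates = ["on_prem"] if requires_offline else ["on_prem", "cloud"]

    def total(option: str) -> float:
        return sum(weights[f] * scores[option][f] for f in FACTORS)

    return max(candidates, key=total)
```

Treating the offline requirement as a filter rather than a heavy weight keeps a strong cloud score from ever outvoting a security constraint.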

Implementing either an on-prem or a cloud-based solution involves a thorough evaluation of the customer’s requirements and working environment for system integration. The competing cognitive service offerings of the various platforms are evaluated for performance, and recommendations are provided for implementation. After the review gate and approval from the customer:

For example, the Black Lung AI project combines an AI model deployed in the cloud with on-prem OWCP (Office of Workers’ Compensation Programs) batch processing servers, a hybrid architecture that spans both environments. When input files are moved to the on-prem landing zone on the batch server, an API call is triggered to the cloud-deployed AI model, and the prediction results are returned to the on-prem server, which performs post-processing using AI techniques/algorithms before the review process is completed.
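The landing-zone pattern just described can be sketched as a polling step. This is an illustration under assumptions, not the OWCP implementation: `predict` is a hypothetical stand-in for the cloud model API call, the threshold-based `needs_review` flag is a placeholder for the project’s actual post-processing, and moving processed files to a `done` directory is an assumed way to avoid resubmitting them.

```python
from pathlib import Path
from typing import Callable, Dict, List


def process_landing_zone(landing: Path, done: Path,
                         predict: Callable[[bytes], float],
                         threshold: float = 0.5) -> List[Dict[str, object]]:
    """Send each new file to the model, post-process locally, then move the file out."""
    done.mkdir(parents=True, exist_ok=True)
    results = []
    for path in sorted(landing.glob("*")):
        if not path.is_file():
            continue
        score = predict(path.read_bytes())  # stands in for the cloud API call
        # Placeholder post-processing: turn the raw score into a review flag.
        results.append({"file": path.name, "score": score,
                        "needs_review": score >= threshold})
        path.rename(done / path.name)  # prevents the file from being sent twice
    return results
```

In practice the trigger could come from a scheduler or a file-system event; the function above only covers what happens once a batch of files is present.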

Our Emerging Technologies Practice’s AI/ML team continually explores emerging technologies to address business challenges and meet the objectives of the Department of Labor (DOL). When project requirements call for it, the team begins an exploration and training process to develop innovative solutions. This includes attending webinars and demonstrations of new products or features, using training materials, and completing coursework provided by platform vendors. When technical constraints become obstacles, the team works with the platform provider’s technical support team for guidance and joint problem-solving.

After the solution is successfully implemented according to the designated system architecture, the customer moves into the O&M plan managed by the ET AI/ML team. The plan covers product demonstration, delivery of user guides, and documentation of transition strategies.

During the O&M phase following the production release, customers can open a service ticket with the ET AI/ML team to resolve any issues they encounter. As part of the O&M plan, the team also periodically updates both the platform and the AI models to keep the operational versions working; these updates are a core part of the ongoing maintenance strategy.

The ET AI/ML team’s data governance strategy for its AI-driven solutions is built primarily on Snowflake’s cloud-based data warehouse. Projects that combine cognitive services with data analytics and insights involve a wide range of structured and unstructured data, including data used to train custom machine learning models, log data from every API call to a model, model performance metrics, resource consumption such as CPU/GPU usage, error tracking, billing details, return on investment calculations, model drift assessments, and Responsible AI metrics.
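The report does not say which measure the team uses for model drift. One common choice is the population stability index (PSI), which compares the binned distribution of a feature or model score at training time (expected) with its distribution in production (actual) as the sum over bins of (actual - expected) * ln(actual / expected). The sketch below assumes both inputs are lists of bin proportions with the same bin edges; the `eps` floor for empty bins is an assumption.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as per-bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift; any threshold used for alerting would need to be set per model.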

Data from all active projects, amounting to millions of transactions, is stored securely in the centralized Snowflake data warehouse. Scheduled scripts update the data tables regularly to keep the information accurate and current, and compute instances can be scaled on demand to support the required analytics.

Statistical computations on the collected data are run in Snowsight, Snowflake’s SQL-driven web interface. Appropriately sized compute resources are allocated to access the dataset, write and execute queries, load data into tables, and perform complex calculations.
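The kind of load-then-aggregate work described above can be sketched locally. In this sketch `sqlite3` stands in for Snowflake so it runs without an account, and the `api_call_log` table, its columns, and the chosen KPIs (call count, error rate, average latency, total cost) are hypothetical; the `GROUP BY` query itself is plain SQL of the kind one would run in Snowsight.

```python
import sqlite3
from typing import Iterable, List, Tuple

# Per-project KPIs over a hypothetical API-call log table.
KPI_QUERY = """
SELECT project,
       COUNT(*)                                            AS calls,
       AVG(CASE WHEN status = 'error' THEN 1.0 ELSE 0 END) AS error_rate,
       AVG(latency_ms)                                     AS avg_latency_ms,
       SUM(cost_usd)                                       AS total_cost_usd
FROM api_call_log
GROUP BY project
ORDER BY project
"""


def load_and_summarize(records: Iterable[Tuple[str, str, float, float]]) -> List[tuple]:
    """Load (project, status, latency_ms, cost_usd) records, then compute per-project KPIs."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE api_call_log "
                 "(project TEXT, status TEXT, latency_ms REAL, cost_usd REAL)")
    conn.executemany("INSERT INTO api_call_log VALUES (?, ?, ?, ?)", records)
    return conn.execute(KPI_QUERY).fetchall()
```

Rows like these are what a dashboard tile would plot per project; the scheduled update scripts mentioned earlier would append new log records before the query runs.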

Program-level and project-specific data, along with Key Performance Indicators (KPIs), are computed systematically, and the resulting metrics are visualized with Snowsight’s dashboard feature. The analytics reporting dashboard tracks the key insights derived from the data and can be shared with organizational leaders directly through the platform.

This gives both DOL agency customers and leadership a visual view of the tangible benefits of the implemented solutions, which contribute to advancing the interests of American workers and job seekers, a central mission of the DOL.

Implementing a Responsible AI framework is also a central focus of all AI/ML projects. Metrics such as demographic parity are checked carefully to ensure oversight and adherence to ethical AI principles.
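As an illustration of the demographic parity check mentioned above, the sketch below computes each group’s selection rate (the share of positive predictions) and reports the largest gap between groups. It assumes binary predictions (1 = positive outcome) and one group label per prediction; the function names are hypothetical. The open-source Fairlearn library offers a comparable `demographic_parity_difference` metric.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each demographic group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: Sequence[int],
                                  groups: Sequence[str]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means perfect parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

A value near zero means the model selects each group at a similar rate; what gap counts as acceptable is a policy decision, not something the metric decides.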

The following summarizes key takeaways and insights from earlier experience:

By applying these lessons, a more informed and strategic approach can be taken in future federal procurements with similar requirements.

When designing the system architecture and working solution for a particular use case, the team considers various options, including open-source technologies, to fulfill the project requirements and mission statement. Whether an open-source technology can be used depends on factors such as FedRAMP status, system integration capability, performance, and a review of security-related issues with approval from the security operations team. It is also worth noting that an AI-Ops solution can involve several processing blocks and can mix open-source and commercial products.

For example, the voicemail transcription project uses an AI-based cognitive service to convert speech to text. Because the input files were not in an accepted WAV format, the open-source tool FFmpeg was added to the AI-Ops flow to convert them to WAV files, which required a thorough security evaluation before production release. Another example of open-source use is custom training of Hugging Face natural language processing models on the SageMaker platform for a claims review use case.
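A conversion step like the one described above could be wrapped as follows. This is a sketch, not the project’s actual command: the target of mono, 16 kHz, 16-bit PCM WAV is an assumption (a common input format for speech services), and the helper names are hypothetical. The flags used (`-y`, `-i`, `-ac`, `-ar`, `-c:a`) are standard FFmpeg options.

```python
import shutil
import subprocess
from typing import List


def build_wav_command(src: str, dst: str, sample_rate: int = 16000) -> List[str]:
    """FFmpeg arguments to convert an audio file FFmpeg can read into mono 16-bit PCM WAV."""
    return ["ffmpeg", "-y",            # overwrite dst if it already exists
            "-i", src,                 # input file
            "-ac", "1",                # downmix to one channel
            "-ar", str(sample_rate),   # resample (16 kHz is an assumed target)
            "-c:a", "pcm_s16le",       # 16-bit little-endian PCM, the usual WAV codec
            dst]


def convert_to_wav(src: str, dst: str) -> None:
    """Run the conversion, failing loudly if FFmpeg is missing or returns an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg is not installed or not on PATH")
    subprocess.run(build_wav_command(src, dst), check=True, capture_output=True)
```

Building the argument list separately from running it keeps the exact command reviewable, which helps when a security team has to approve how an open-source binary is invoked.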